Shadows2Height: Inferring the height map of aerial images

The motivation: A height map of the objects depicted in an image is a highly valuable source of information in remote sensing and geospatial/Earth observation applications. It can support complex tasks including, but not limited to, tree monitoring, 3D visualization of structures, and policymaking. Height maps can also help solve remote sensing problems that exploit data fusion and multi-modal information.

The method: We developed a deep learning model [1] that infers the height map of an aerial RGB image without requiring any auxiliary information. The model learns the geometry of the landscapes in the training dataset, together with the features of the structures and objects they contain, and uses them to build a height map of the image. We trained the model on aerial images of a large area of Manchester, UK, and of the University of Houston campus, US, and its surroundings, covering a total area of 25 sq. km. The imagery has a resolution of 0.2 m/pixel, while the ground truth height maps have a resolution of 0.5 m/pixel.

The results: We evaluated the model using the Mean Absolute Error (MAE) and the Root Mean Squared Error (RMSE). On the test data we obtained an MAE of 0.59 m and an RMSE of 1.4 m for the Houston area, and an MAE of 0.78 m and an RMSE of 1.63 m for the Manchester area. These errors are lower than previously reported state-of-the-art results by a significant margin. To achieve them, we combined some novel architectural features with components used by models that already perform very well on this task into a single model.
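For readers unfamiliar with the two metrics, the following is a minimal sketch of how MAE and RMSE can be computed between a predicted and a reference height map. It is not the evaluation code used in [1]: the function name `height_errors`, the choice to skip NaN reference pixels, and the toy values are all assumptions made for illustration.

```python
import numpy as np


def height_errors(predicted, reference):
    """Return (MAE, RMSE) in the units of the inputs.

    Assumption for this sketch: reference pixels without a measurement
    are stored as NaN and are excluded from both metrics.
    """
    predicted = np.asarray(predicted, dtype=float)
    reference = np.asarray(reference, dtype=float)
    valid = ~np.isnan(reference)
    diff = predicted[valid] - reference[valid]
    mae = float(np.mean(np.abs(diff)))
    rmse = float(np.sqrt(np.mean(diff ** 2)))
    return mae, rmse


# Toy 2x2 height maps in meters (made-up values, not data from the study).
reference = np.array([[0.0, 2.0], [4.0, 10.0]])
predicted = np.array([[1.0, 2.0], [4.0, 7.0]])
mae, rmse = height_errors(predicted, reference)
print(f"MAE = {mae:.2f} m, RMSE = {rmse:.2f} m")
```

Because RMSE squares each error before averaging, it is never smaller than MAE and grows quickly when a few pixels are badly wrong, which is why the two numbers are usually reported together.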

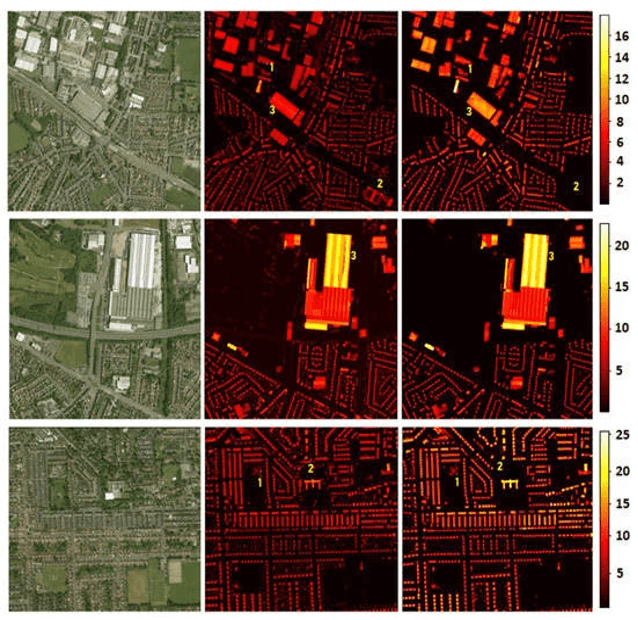

Figure 1. The original aerial image is shown on the left and the inferred height map on the right. The color bar maps the colors of the height map to heights in meters.

Interestingly, we observed that the model frequently avoids errors present in the ground truth height maps, which were created with LiDAR technology, such as spurious or extreme values and incomplete measurements. These problems occasionally occur in LiDAR measurements because of peculiarities in the acquisition process. By avoiding them, our model achieves very good results and establishes itself as a promising approach for obtaining height maps of landscapes that contain reflections (a major problem for LiDAR technology), peculiar architectural shapes and relations, or partially occluded structures.

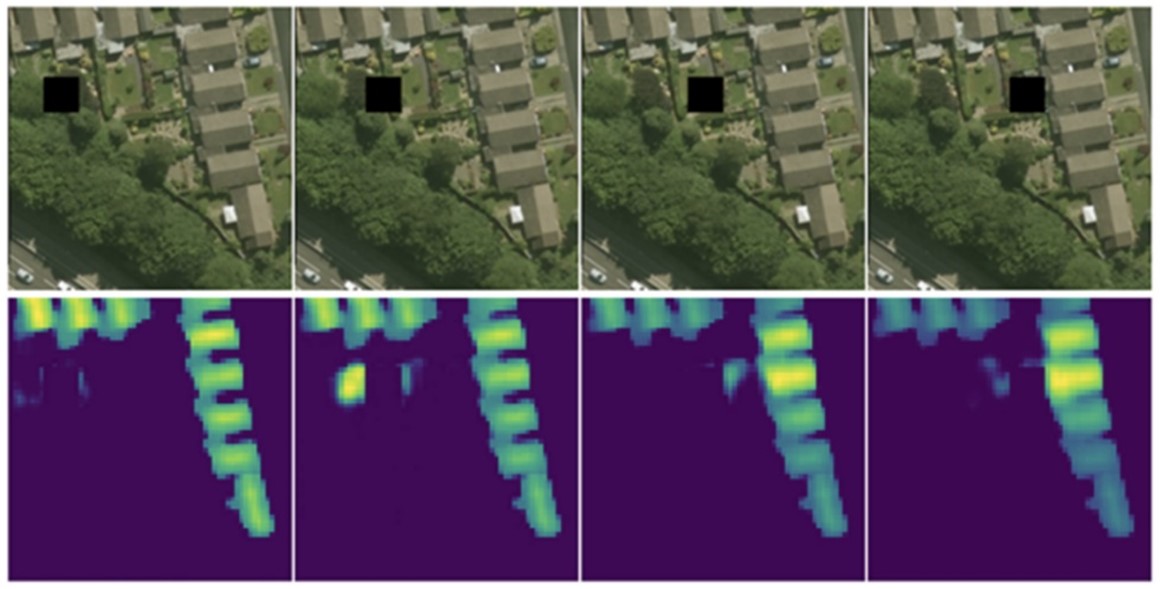

The perspective: Another interesting property of our model is that it partially relies on shadows to predict the height map. The length of a shadow depends on the sun’s elevation angle and the height of the structure casting it, while the sun’s azimuth determines the shadow’s direction. We simulated shadows around objects by sliding a black square over the input image and observed how the model changes its height prediction for objects near the simulated shadow: the model systematically predicts greater heights, interpreting the fake shadows as evidence of tall structures nearby.

Figure 2. Sliding a black square over the aerial image simulates the presence of shadows cast by nearby structures/objects. The model is fooled into predicting greater heights for the nearby objects.
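The sliding-square experiment can be sketched roughly as follows. This is an illustrative reconstruction, not the code used for Figure 2: the function `sliding_square_response`, its `square` and `stride` parameters, and the `predict` callable (a stand-in for the trained model) are all hypothetical. For simplicity the sketch averages the change over every pixel outside the square rather than only over nearby objects.

```python
import numpy as np


def sliding_square_response(image, predict, square=16, stride=16):
    """Slide a black square over `image` and measure the model's reaction.

    image:   H x W x 3 array.
    predict: callable mapping an image to an H x W height map
             (hypothetical stand-in for the trained model).
    Returns one value per square position: the mean change in predicted
    height over all pixels outside the square, relative to the prediction
    on the unmodified image.
    """
    image = np.asarray(image, dtype=float)
    baseline = predict(image)
    h, w = image.shape[:2]
    rows = range(0, h - square + 1, stride)
    cols = range(0, w - square + 1, stride)
    response = np.zeros((len(rows), len(cols)))
    for i, r in enumerate(rows):
        for j, c in enumerate(cols):
            occluded = image.copy()
            occluded[r:r + square, c:c + square, :] = 0.0  # fake shadow
            outside = np.ones((h, w), dtype=bool)
            outside[r:r + square, c:c + square] = False
            change = predict(occluded)[outside] - baseline[outside]
            response[i, j] = change.mean()
    return response


def toy_predict(image):
    """Toy model (assumption): height grows with the share of black pixels."""
    dark_fraction = np.mean(image.sum(axis=2) == 0)
    return np.full(image.shape[:2], 10.0 * dark_fraction)


bright_image = np.ones((64, 64, 3))
response = sliding_square_response(bright_image, toy_predict)
print(response.shape, response.mean())
```

With a real model in place of `toy_predict`, consistently positive entries in `response` would correspond to the behaviour described above, where the fake shadow pushes the predicted heights upward.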

We plan to take advantage of this observation and reinforce the model’s reliance on shadows to make it robust to data domain shifts, e.g., when the same model is used to predict the height maps of areas that were not in the training set and that may have different architectural features (e.g., very different building types) or depict entirely different objects (e.g., tree species, power line structures, bridges).
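As a reminder of why shadows carry height information at all, the sketch below uses the standard flat-ground relation between an object's height, the sun's elevation angle, and the length of its shadow. It is not part of the model in [1]; the function names are hypothetical, and flat terrain with a vertical object is assumed.

```python
import math


def shadow_length(height_m, sun_elevation_deg):
    """Shadow length on flat ground for a vertical object (idealized)."""
    if not 0.0 < sun_elevation_deg <= 90.0:
        raise ValueError("sun elevation must be in (0, 90] degrees")
    return height_m / math.tan(math.radians(sun_elevation_deg))


def height_from_shadow(shadow_m, sun_elevation_deg):
    """Invert the relation above: object height from shadow length."""
    if not 0.0 < sun_elevation_deg <= 90.0:
        raise ValueError("sun elevation must be in (0, 90] degrees")
    return shadow_m * math.tan(math.radians(sun_elevation_deg))


# A 10 m structure with the sun 45 degrees above the horizon casts a
# shadow of about 10 m, i.e. about 50 pixels at 0.2 m/pixel.
length_m = shadow_length(10.0, 45.0)
length_px = length_m / 0.2
print(f"{length_m:.1f} m, {length_px:.0f} px")
```

A lower sun lengthens the shadow of the same structure, so shadow length alone does not determine height unless the sun's elevation is known or implicitly consistent across the imagery.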

[1] Savvas Karatsiolis, Andreas Kamilaris and Ian Cole, IMG2nDSM: Height Estimation from Single Airborne RGB Images with Deep Learning, Remote Sensing, vol. 13, no. 12, article 2417, June 2021.